Do you generally trust people who speak confidently, without indecision, with not a word out of place? Of course you do. We're conditioned to it. But some people lie.

And this is one of the challenges with a large language model (LLM) like ChatGPT. Among all their stunning capabilities—and they are a mind-blowing achievement—the capacity to lie confidently is one of the most impressive.

Part of their confidence, even when they're wrong, is the perfection of the writing mechanics. ChatGPT spells correctly. It gets the grammar right. It uses subordinate clauses. It punctuates well. It writes in structured paragraphs! And it's fast. This is great, but it can lull the user into forgetting it's a machine that often makes mistakes.

Fortunately, there are countermeasures you can employ to detect these lies. I'll cover many of them here.

(By the way, let's acknowledge that LLMs are not actually “lying”. Nor do they “hallucinate”, “do homework”, “read articles”, or “make things up”. These are just convenient metaphors for understanding how and when to apply skepticism to your LLM “conversations”.)

Tactic 1

How well will an LLM read and summarize an article on the web? This simple exercise beautifully demonstrates how confidently LLMs can lie, and hints at their inner workings. I ran this exercise through ChatGPT, Bing Chat, and Google's Bard, with very similar results.

Please pretend to be a product manager at Jasper.ai who is analyzing and summarizing this blog post for competitive details: https://writer.com/blog/responsible-generative-ai-adoption-and-training/

As a product manager at Jasper.ai, I have analyzed and summarized the blog post titled “Responsible Generative AI Adoption and Training” published on the Writer.com blog.

The blog post highlights the importance of responsible adoption and training of generative AI technologies. The author discusses the potential benefits and risks associated with these technologies and how companies can mitigate those risks.

The article identifies key challenges in training generative AI models, including data bias, privacy concerns, and ethical considerations ….

Overall, this blog post provides valuable insights for….

The Writer post has nothing to do with training ML models. This is an obvious LLM lie. The article does discuss training—but in the context of training human employees to use generative AI well.

So what's going on here? LLMs are amazingly complex machines; the generated content is not

the result of insanely long chains of if/then/else

statements. Instead, the model predicts the most likely next word based on the previous words —the

context of the conversation. It uses memory cells to contain that context (and I don't

pretend to understand what that looks like).

In this case, ChatGPT confidently lied to us about the content of that article because it does not look up and read the article. In fact, it made up its article from whole cloth, using words in the URL as inspiration. The the title it gave is clearly based on that URL path.

By the way, the word “overall” at the start of a summary paragraph is a dead giveaway that ChatGPT wrote it. If you're using an LLM to write some text, please remove it. It's fluff anyway. (Arguably, the summary paragraph is a tell in itself.)

The copy/paste workaround

I also tested Chatsonic (by Writesonic—it has a limited free plan), and it did the same thing as ChatGPT. (Presumably, it's using GPT in the back end.) However, both tools do better when you copy and paste a large block of text to be summarized. This makes sense, based on our understanding of the scope of an LLM's context.

Here's what Chatsonic said when I pasted in the first few hundred words of the Writer blog post and asked it to “concisely summarize the salient points”.

The article discusses the responsible adoption of generative AI in the workplace. It emphasizes the importance of establishing clear policies regarding the use of AI, including boundaries, people, and use scenarios. The article suggests a crawl/walk/run approach, starting with a low-risk proof-of-concept, piloting various tools, and rolling out training across the organization. The article also highlights the need for employees to develop “muscle memory” when using AI tools, integrating them intuitively into their workflow. Overall, the article underscores the importance of ethical considerations, adequate training, clear guidelines, human oversight, transparency, and accountability in AI-generated content.

Not too bad. Overall.

Bard lies even harder

Who is the author of https://writer.com/blog/responsible-generative-ai-adoption-and-training/?

I feel like quoting Luke Skywalker from The Last Jedi: “Impressive. Every word in that sentence was wrong.” That's not the title of the article. Amir Shevat is not the author. And he apparently has nothing to do with Writer. Bard is simply using its memory cells to hallucinate these non-facts.

:-|

Clever, Bingbot, clever

Microsoft's Bing Chat cleverly guessed at the author without actually doing any reading. Cute. Like the others, it invented a title based on words in the URL.

Who is the author of https://writer.com/blog/responsible-generative-ai-adoption-and-training/?

The author of the article “Responsible Generative AI Adoption and Training” on Writer's blog is Writer itself.

Tactic 2

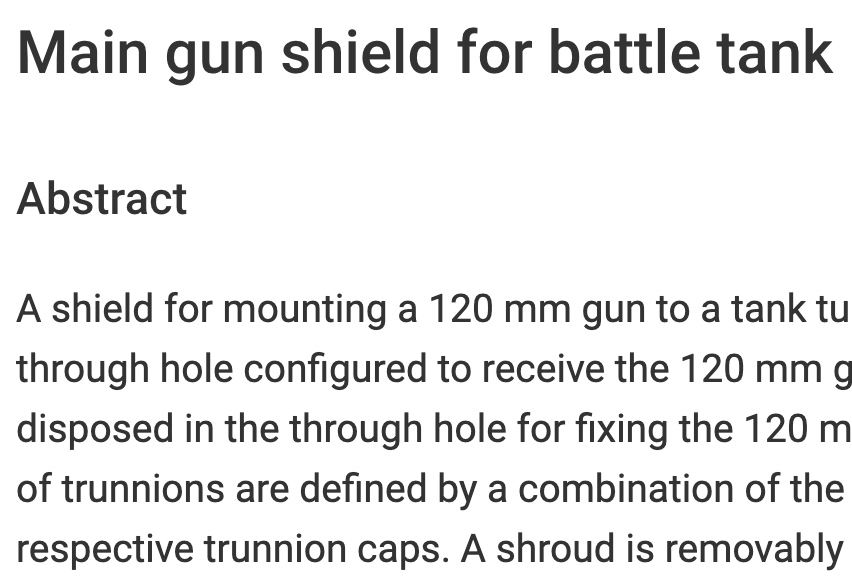

I asked ChatGPT to summarize the career of Sergey Nikolenko, a noted expert in synthetic data. I specifically asked for a list of his patents. The Washington Post reported that Google's C4 data set, a corpus used to train some LLMs, includes lots of text from patents.google.com, so I thought this might be a gimme for ChatGPT.

Its initial answer was—no surprise—confident, but seemed a little off. So I pressed it:

Please re-generate the list of Sergey's patents, listing only US patents, either provisional or granted.

I apologize for any confusion. After conducting further research, I found that Sergey Nikolenko has filed several patent applications, including those I listed earlier. However, as of my knowledge cutoff of September 2021, only one patent has been granted by the United States Patent and Trademark Office (USPTO) to Sergey Nikolenko. Here is the information for that patent:

- Nikolenko, S. I., Kuznetsov, M. S., & Parfenov, V. A. (2015). System and method for synthesizing training data for machine learning models. US Patent No. 9,194,664.

That doesn't seem right. I thought he had a few patents to his name. ChatGPT's very confident inclusion of the patent number in its one citation is helpful though. Let's look it up:

Umm, talented though he is, I don't think Mr. Nikolenko worked on tank bits.

Confirming that error properly made me doubt other parts of ChatGPT's responses. This kind of skepticism and sleuthing is necessary and healthy when using LLMs as a tool. The implication of Tactic 2 here is that we might be writing less in the future, but if we want credible results, we'll be doing a lot of basic fact-checking.

Side note: ChatGPT loves to apologize profusely. That doesn't at all mean its corrections are true.

Tactic 3

I asked Bard to explain the RICE methodology, and it did a good job with

the definitions of R, I, C, and

E. But it went on to tell me that to get the final score

used for prioritization, you multiply all of them together.

What?

This just didn't seem right. I asked Bard if it was sure:

You said “the scores are then multiplied together to create a RICE score.” Really?

How confidently it lies!

If this were the formula, we'd all be doing the longest things all the time.

I suppose R*I*C*E would work if E was a fraction,

or if it was some kind of “Ease” measure. Of course,

the correct formula is (R*I*C)/E.

So, sharpen your human logic, and use it to second-guess LLM statements. A little skepticism goes a long way.

Tactic 4

I've already used this tactic some in the examples above. Pressing the witness is a way to get it to crack:

Challenge specific points

In our chat about RICE, ChatGPT incorrectly identified Sean Ellis as its inventor.

Are you sure that RICE was invented by Sean Ellis?

I apologize for any confusion. While Sean Ellis is often credited with popularizing the RICE scoring framework, it was actually created by Intercom, a customer messaging platform. Intercom originally used the RICE framework to prioritize their own…

Ask for links to authoritative references

What is the Japanese term in the TPS for continuous improvement?

The Japanese term in the TPS (Toyota Production System) for continuous improvement is "Kaizen" (改善). It refers to the philosophy of constantly improving processes and practices in all aspects of an organization, from manufacturing to management, to achieve greater efficiency, quality…

Please provide two links to authoritative references where I can read more.

At this point, ChatGPT cited a couple of books.

I meant web pages, please.

Sure, here are two authoritative web pages where you can read more about the concept of Kaizen and its application in the Toyota Production System:

- The Toyota Global website has a page dedicated to the Toyota Production System that includes information on the Kaizen philosophy and its application in Toyota's manufacturing processes. You can find it at: https://global.toyota/en/company/vision-and-philosophy/toyota-production-system/

- The Lean Enterprise Institute website has a page dedicated to Kaizen that includes an overview of the philosophy and its history, as well as practical guidance for implementing Kaizen in an organization. You can find it at: https://www.lean.org/lexicon/kaizen

The first link is broken—maybe the web page moved since ChatGPT was last trained on the relevant corpus, but maybe it's hallucinating. The second link is valid, and confirms what ChatGPT told us.

Ask it to elaborate

Finally, you can ask the LLM for more detail on something it said, which I did in the patents. example above. Often this will confirm the truth, or the falsehood.

Tactic 5

I've used this tactic in the examples above. While you can't trust any of today's LLMs to consistently tell the truth, it is instructive to see when they disagree. Here's Bard vs. ChatGPT on RICE:

Multiply the Reach, Impact, and Confidence scores together, then divide by the Effort score to calculate the RICE score. The higher the RICE score, the more priority the project or feature should receive.…

ChatGPT wins. (But, it got important things wrong in other parts of its answer, specifically to “assign a score of 1–10 to each component for each project or feature”—including Reach, which is a major departure from how Intercom explains it.)

Overall…

The one thing to take away: use LLMs, but only with great skepticism. Don't let their confidence and excellent grammar fool you. Make that your starting point and you will detect the confident lies.

As you've seen in the examples above, you can combine several tactics to validate and correct the LLM's output:

- Become a fact-checker: we may all write less in the future, but for the time being we'll be doing basic editorial due diligence more often. Treat today's LLMs as cub reporters with questionable ethics.

- Trust in logic (and your intuition): the confident lies frequently go against basic logic, as we saw with the RICE formula. If you can't find a confirming or disconfirming reference somewhere, just reject claims that don't pass the sniff test.

- Press the LLM for more details on something fishy: ask it to elaborate on specific points, ask for more detail, and ask it for citations. The additional scrutiny may reveal cracks in the truth.

- Pit LLMs against one another: making sure not to blindly trust individual statements from any given LLM, asking the same question of ChatGPT and Bing Chat and Google Bard can reveal problems too. Think of it as separating three suspects in a crime and getting them to rat each other out. Fun!

Use LLMs. They're amazing, powerful, helpful, and entertaining. But please know that they sometimes enthusiastically lie without remorse.